In early December, EurIPS 2025 brought Europe’s AI community together in Copenhagen for a week of intense discussion, exchange, and reflection on the future of artificial intelligence. Against a backdrop of rapid technological acceleration and growing societal concern, ELIAS took part in the conference with a dual presence: engaging with the ecosystem at the Start-Up Village and supporting the organisation of the “Rethinking AI — Efficiency, Frugality, and Sustainability” workshop.

Together, these two strands captured a central tension shaping today’s AI landscape: how to foster innovation and opportunity while also confronting the environmental, social, and cultural consequences of AI at scale.

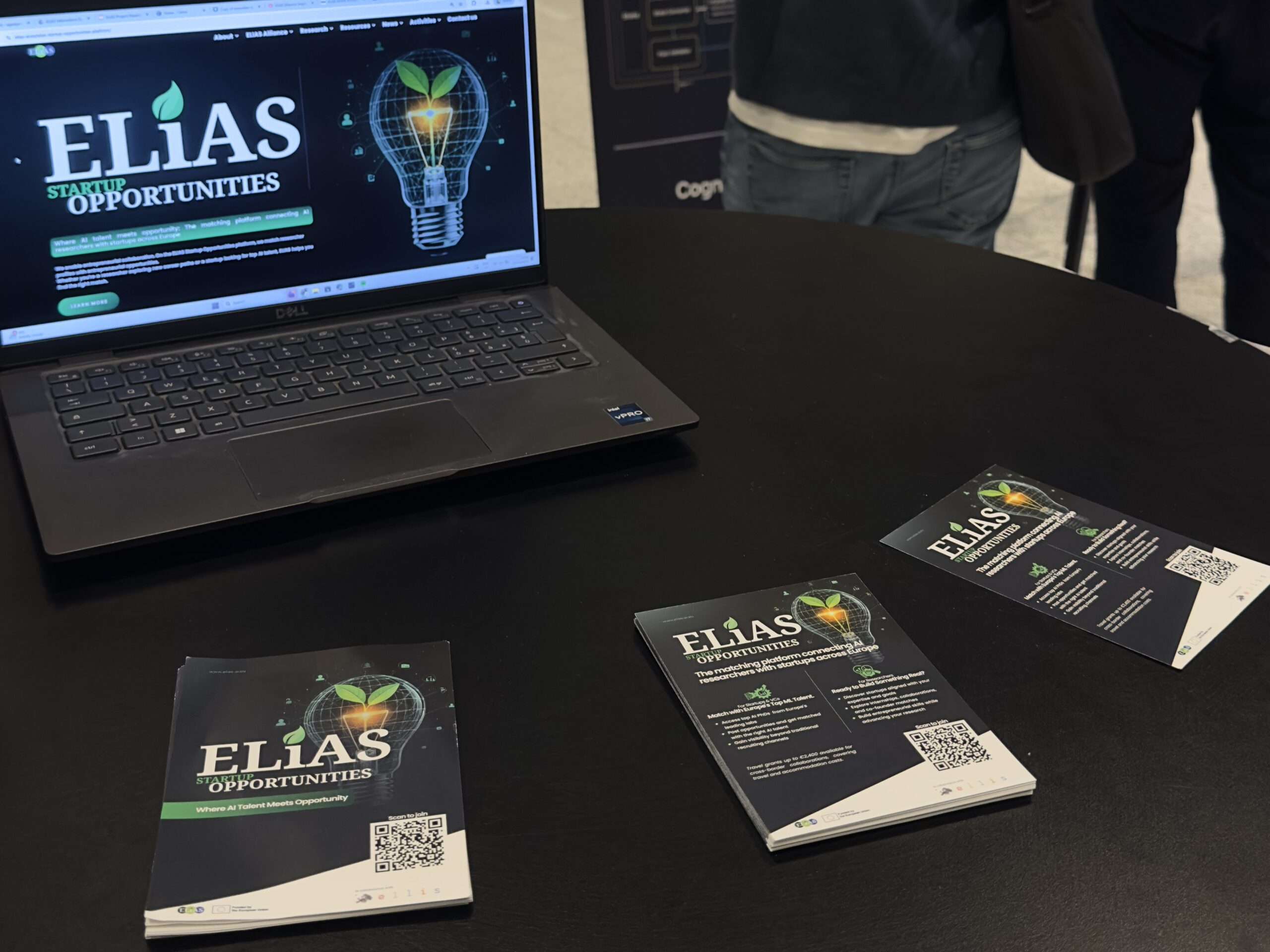

A Visible Presence at the Start-Up Village

From 3 to 5 December, ELIAS and the ELIAS Alliance hosted a dedicated booth at the EurIPS Start-Up Village, joined by colleagues from the Hasso Plattner Institute and Fondazione Bruno Kessler. Rather than focusing on a single product, the booth emphasised presence, conversation, and connection.

The ELIAS team used the opportunity to introduce the ELIAS Startup Opportunities Platform, a practical bridge between cutting-edge research and entrepreneurial pathways. Conversations ranged from early-stage ideas and research translation to broader questions about how Europe can support responsible AI innovation.

In the fast-paced environment of the Start-Up Village, ELIAS’s presence was less about pitching and more about listening: understanding the needs of startups, the aspirations of young researchers, and the challenges of turning AI research into real-world impact.

Rethinking AI: From Efficiency to Responsibility

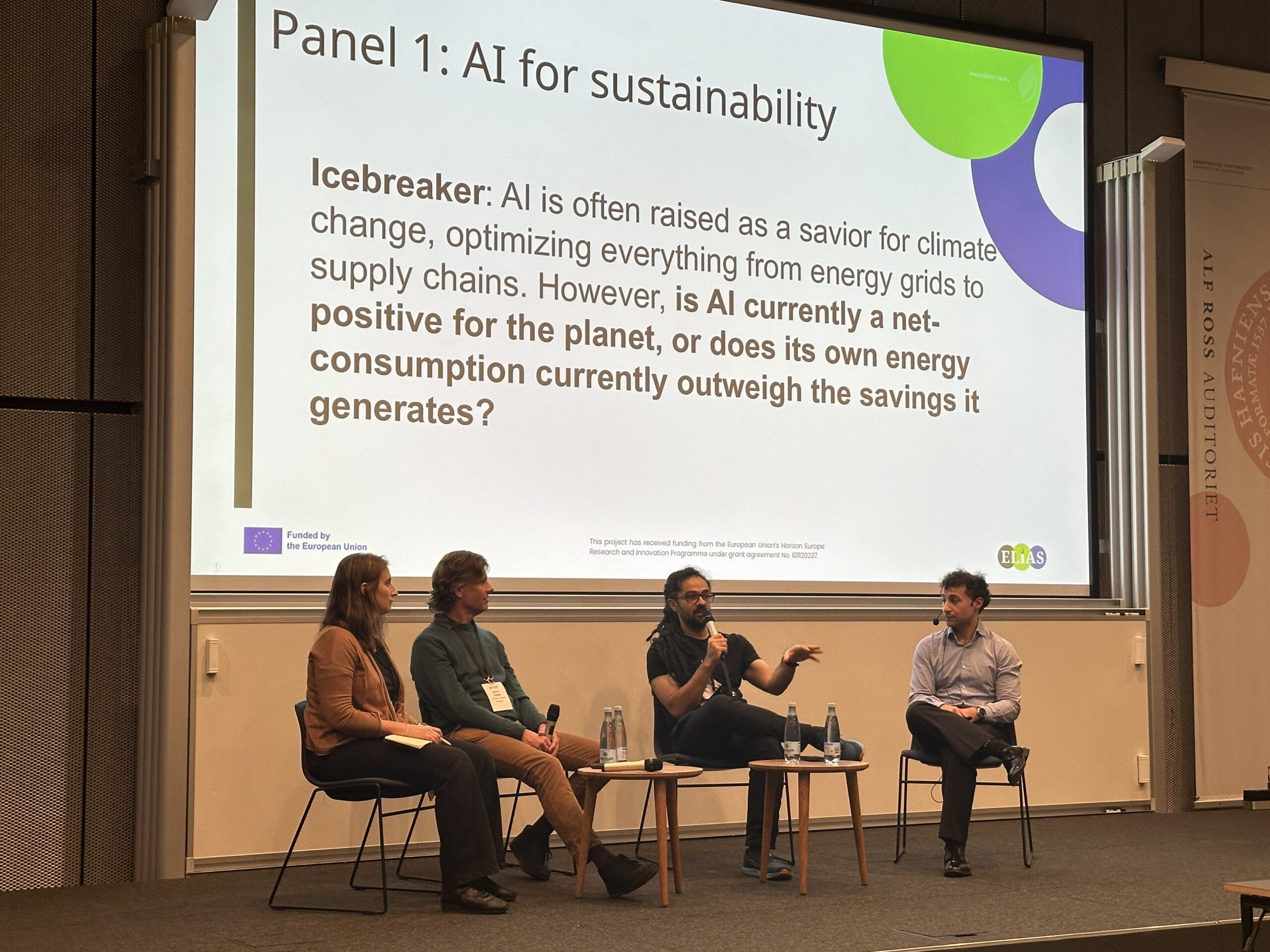

If the Start-Up Village highlighted momentum and opportunity, the Rethinking AI workshop, held on 6 December at the University of Copenhagen, offered a space to pause — and ask harder questions.

As AI systems grow in complexity and scale, their environmental and societal impacts are impossible to ignore. The workshop, co-organised by Quentin Bouniot (TUM / Helmholtz Munich), Florence d’Alché-Buc (Télécom Paris), Enzo Tartaglione (Télécom Paris), and Zeynep Akata (TUM / Helmholtz Munich), was built around two complementary pillars:

- Sustainability in AI: reducing the ecological footprint of machine learning research and deployment
- AI for Sustainability: using AI to address urgent environmental and climate challenges

Speakers at the workshop included Loïc Lannelongue (Cambridge Sustainable Computing Lab), Claire Monteleoni (INRIA), Bernd Ensing and Jan-Willem van de Meent (University of Amsterdam), and Sina Samangooei (https://www.cusp.ai/). Over the course of the day, discussions approached AI and sustainability from several angles, combining technical insight with ethical reflection and practical considerations. Several key themes emerged, covering both the promise of AI research and the responsibilities that come with it.

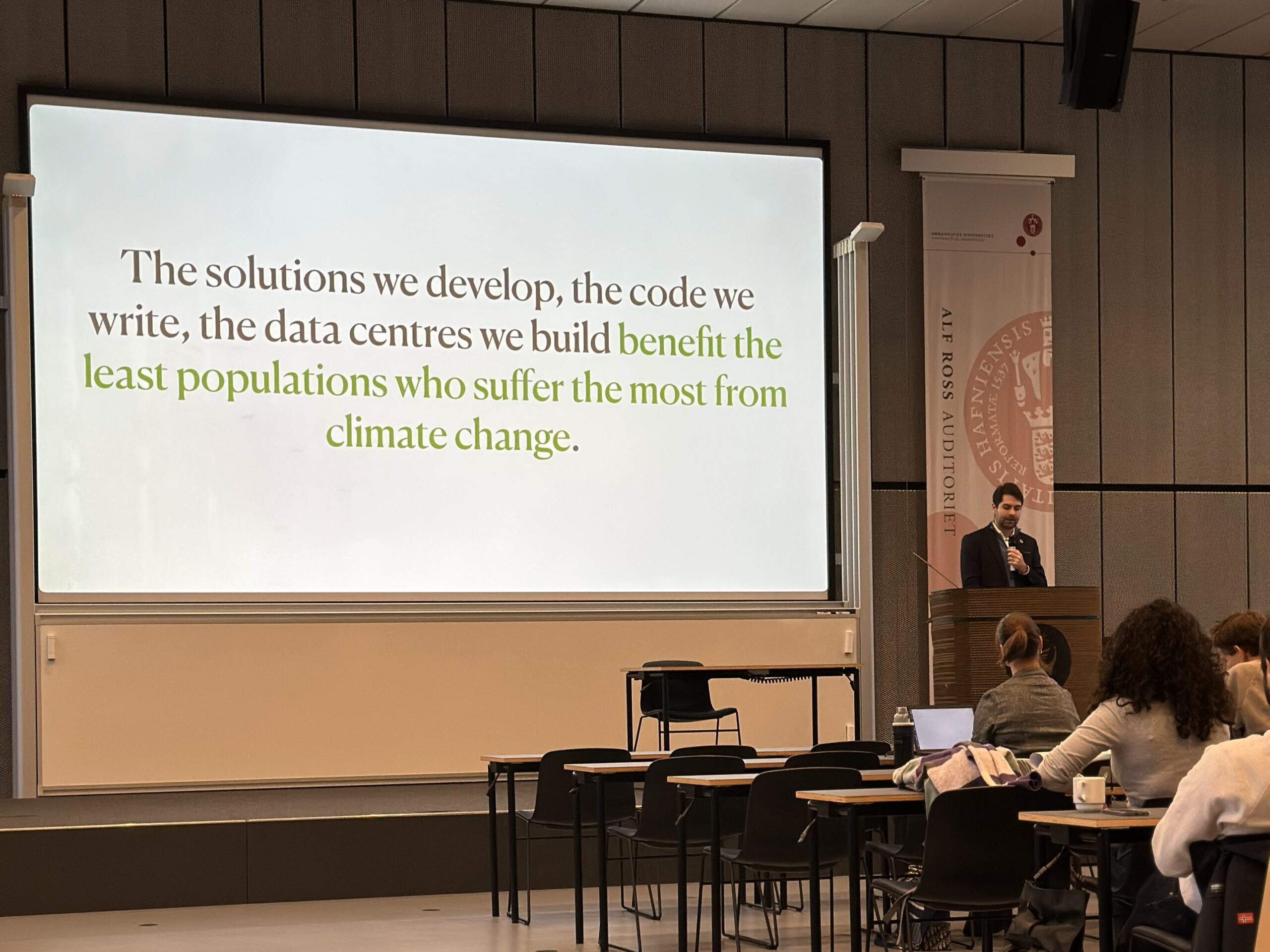

Efficiency is not enough.

A recurring insight was that energy efficiency alone cannot make AI sustainable. As models, algorithms, and data centres become more efficient and energy-conscious, overall demand often grows faster than the savings each improvement delivers. This phenomenon, known as the rebound effect, came up repeatedly. Participants questioned whether building smaller, faster models genuinely reduces environmental impact or simply redistributes it across devices, applications, and geographies. The conversation underscored a critical point: sustainability is not purely a matter of technical optimisation; it also requires cultural and behavioural change within the research community.

Rethinking computation.

Unlike researchers in many experimental sciences, AI researchers are not physically tethered to their equipment. This flexibility opens opportunities for reducing environmental impact that go beyond code and hardware. Simple yet powerful choices, such as scheduling compute-intensive jobs for periods of low-carbon electricity or running experiments in regions with cleaner energy grids, can meaningfully cut carbon footprints. Cross-institutional collaboration, speakers noted, can further enable access to greener compute, provided such arrangements are equitable and do not replicate extractive practices, especially in low- and middle-income countries. The takeaway: rethinking when, where, and how computation happens can deliver measurable sustainability gains without requiring entirely new algorithms.

AI for climate and environmental science.

Beyond the visible “demo-ready” applications, AI is quietly transforming climate research. Participants showcased how AI improves predictions of extreme weather events, helps downscale global climate models into actionable local forecasts, and refines long-term projections, such as sea-level rise decades into the future. These contributions may lack immediate visibility, but their implications for policy, infrastructure planning, and disaster preparedness are profound. Speakers also noted that frugal, task-specific models frequently matched the performance of far larger systems, challenging the assumption that bigger is always better. Hybrid approaches, which combine AI with physical models and treat large-scale simulators as data sources, were highlighted as a particularly effective way to bridge different temporal and spatial scales.

Community as infrastructure.

One of the workshop’s most striking insights was the central role of community. Truly scalable impact in AI for sustainability rarely stems from individual papers alone; it emerges from interdisciplinary ecosystems. These ecosystems are made up of machine-learning researchers embedded in climate labs, climate scientists acquiring AI expertise, and collaborative projects that gradually evolve into enduring research centres. Workshop participants emphasised that building these networks does not always require large grants or formal programmes; personal connections, mentoring relationships, and informal conversations often lay the foundation for long-term progress. The lesson: community is infrastructure, and without it, technical innovation alone cannot translate into lasting societal benefit.

Industry collaboration and accountability.

Applied AI research is frequently guided by the concrete performance requirements of industrial partners. While this orientation provides clarity, relevance, and a sense of accountability, it can also lead research astray when optimisation targets are misaligned with broader sustainability objectives. Speakers repeatedly stressed the importance of transparency: without accurate reporting of energy consumption and environmental costs in research papers, funding proposals, and deployments, AI’s trade-offs cannot be meaningfully evaluated. Responsible AI, they argued, requires aligning technical ambition with ethical and environmental commitments.

Towards responsible AI.

Taken together, the discussions reinforced an overarching message: AI’s potential can only be harnessed responsibly when efficiency, ethics, and community-building advance together. Reducing energy consumption is necessary but insufficient; progress depends equally on how AI is used, how research communities collaborate, and how stakeholders, from universities to industry, define and pursue sustainability goals. By confronting these tensions head-on, the workshop offered a roadmap not just for smarter AI, but for AI that serves society and the planet in lasting, measurable ways.

Why This Matters for ELIAS

ELIAS’s participation in EurIPS 2025 reflected its broader mission: fostering AI innovation that is responsible, sustainable, and socially grounded.

At the Start-Up Village, this meant supporting opportunity, entrepreneurship, and dialogue. At the Rethinking AI workshop, it meant creating space for critical reflection — acknowledging tensions, trade-offs, and uncertainties rather than offering simplistic answers.

As the AI community continues to grow, these conversations are no longer optional. The challenge ahead is not just to build more powerful systems, but to decide what they are for, how they are used, and at what cost.