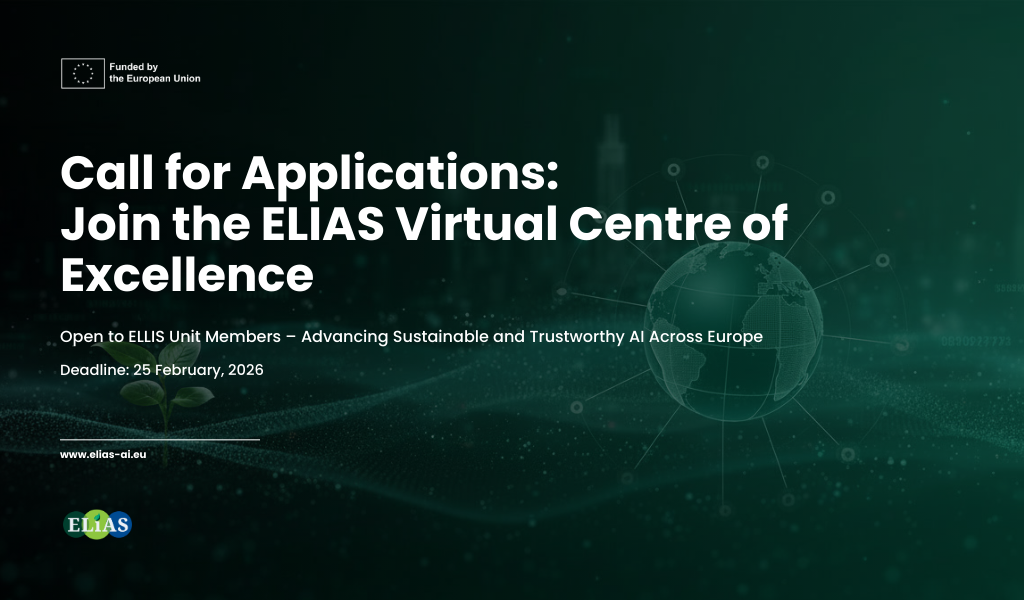

Call for Applications: Join the ELIAS Virtual Centre of Excellence

The European Lighthouse of AI for Sustainability (ELIAS) is expanding its Virtual Centre of Excellence (VCE) in Sustainable and Trustworthy Artificial Intelligence, inviting leading European research institutions to join a growing, interdisciplinary network embedded within the ELLIS ecosystem.

The ELIAS Virtual Centre of Excellence strengthens coordination among Europe’s top AI institutions while extending the reach and impact of ELLIS across the continent. Its expansion follows a transparent, excellence-driven process and places strong emphasis on scientific quality, sustainability, trustworthiness, interdisciplinarity, gender balance, and geographical inclusion, with particular attention to underrepresented regions in Eastern and Southern Europe.

Advancing Sustainable and Trustworthy AI

ELIAS focuses on advancing machine learning and AI research through a Strategic Research Agenda (SRA) structured around three core dimensions of Sustainable AI—the planet, society, and the individual—supported by two cross-cutting enablers:

-

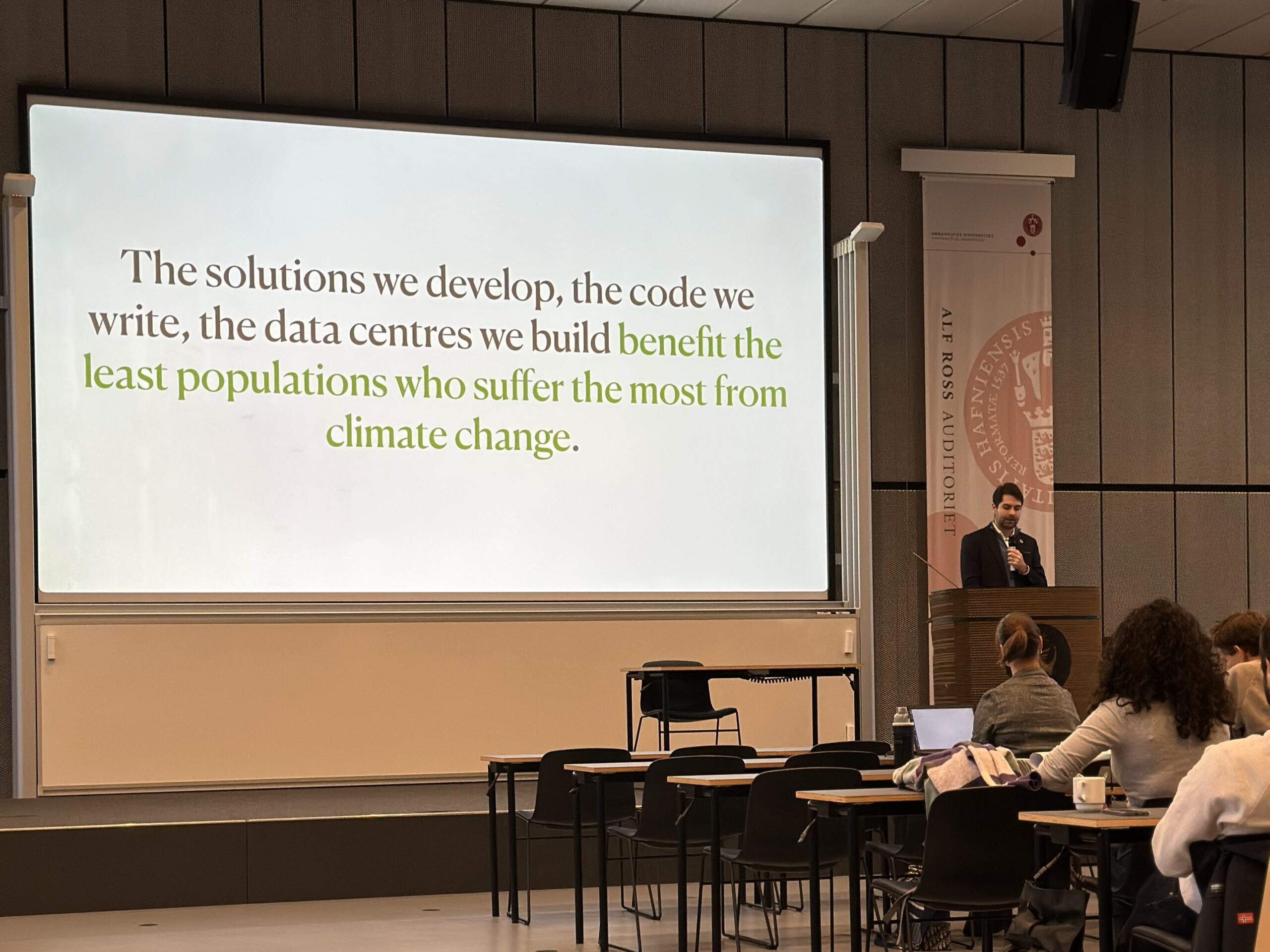

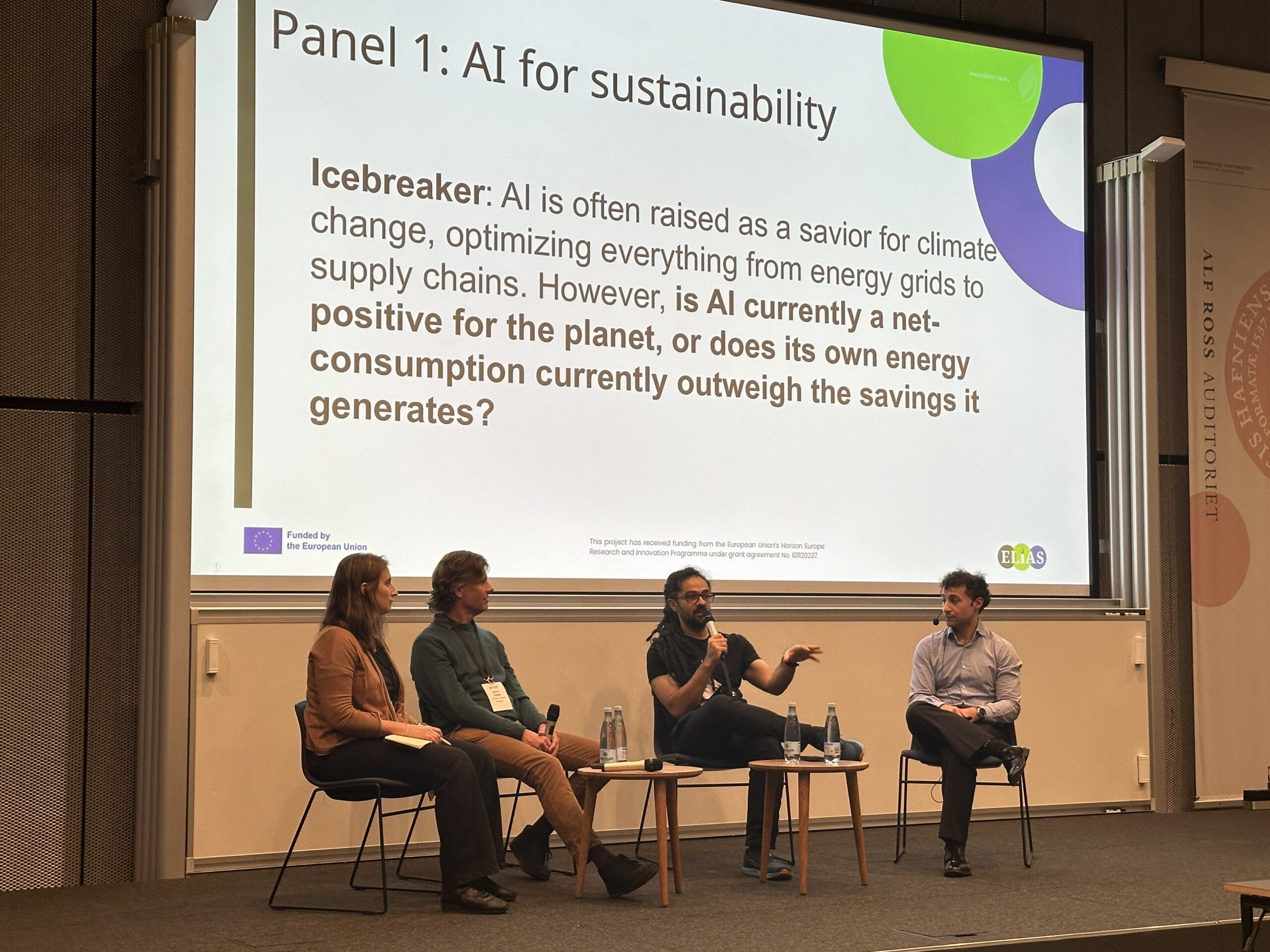

AI for a Sustainable Planet – Hybrid AI models integrating scientific knowledge to support clean energy, sustainable materials, climate resilience, and reduced AI carbon footprint.

-

AI for a Sustainable Society – AI systems to safeguard democracy, counter disinformation, promote inclusive prosperity, and improve shared resource coordination.

-

Trustworthy AI for Individuals – Fair, transparent, privacy-preserving AI attentive to human cognition and diverse needs.

-

Fostering Scientific Excellence – Strengthening Europe’s AI research community through PhD programmes, fellowships, and cross-border collaboration.

-

Entrepreneurship & Tech Transfer (Sciencepreneurship) – Bridging research and real-world impact via open calls, accelerators, internships, and innovation initiatives.

Benefits of Joining the ELIAS VCE:

Institutions joining the ELIAS VCE gain access to:

- Cutting-edge, interdisciplinary research in Sustainable and Trustworthy AI
- Collaboration with leading European AI researchers and work package leaders
- Opportunities for joint publications, projects, and scientific workshops
- Mobility programmes for PhD students and postdoctoral researchers
- Participation in the ELIAS PhD/postdoc programmes and the ELIAS Alliance, supporting AI-driven innovation and entrepreneurship
- Enhanced visibility and reputation through the ELIAS emblem of excellence

Who Can Apply and How:

Interested institutions are invited to submit:

- A Letter of Support signed by the institution’s legal representative

The call is open to institutions that are members of an ELLIS Unit. Each application is reviewed for eligibility and evaluated by the ELIAS committee, with final decisions made by the Principal Investigator. Successful applicants will receive an official invitation to join the Virtual Centre, with new members announced through the ELIAS communication channels.

⚠️ Prospective applicants are strongly encouraged to consult the ELIAS Virtual Centre – Guidelines prior to submitting their application.

Important dates and fees:

Application deadline: 25 February 2026

Fees: No membership fees; institutions cover their own participation costs

Join the ELIAS Community

By expanding its network, ELIAS aims to strengthen Europe’s leadership in high-impact, sustainable, and trustworthy AI—fostering long-term collaboration, innovation, and alignment with European values.

We invite institutions across Europe to become part of the ELIAS Virtual Centre of Excellence and contribute to shaping the future of Sustainable AI.